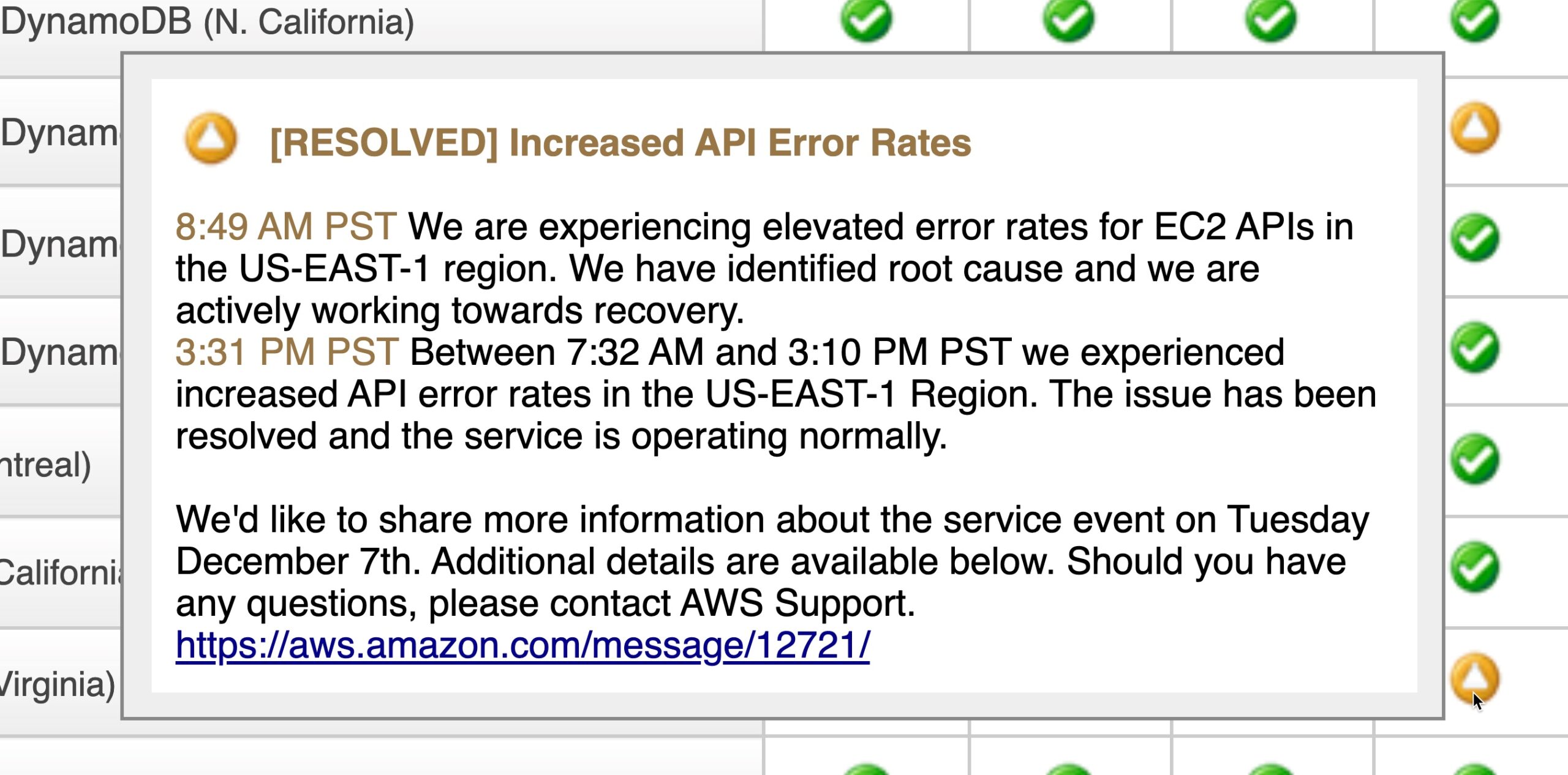

AWS had an outage on December 7th, 2021. It was a bad one, and it showed that even after 15 years of offering cloud services, an experienced cloud provider can still have a bad day. Companies across the world were affected. (I couldn’t even practice Duolingo, but thankfully, they preserved my streak…) And while the operational failure (which, like most operational failures, could have been avoided) was bad, what really damaged trust was that Amazon took too long to post an update and then didn’t post a full one. Few things are as annoying as having your site down, going to the Amazon Web Services Status Page, and seeing “Service is operating normally” when you can see on your own screens that it’s not, it’s all over Twitter, and it has even hit media outlets. All the while, Amazon stays mum.

When I led the RDS team at AWS, the motto my operations leader, Brad Kramer, and I shared was “First, Fast, Last.” This meant that when things went badly, we tried to get a post onto the dashboard saying we were investigating as fast as possible, we updated it frequently, even (especially?) if we’d made no progress, and we stayed yellow or red on the dashboard until every single customer affected by the outage had either had service restored or had been personally contacted by a member of my team with an ETA. Persistence (i.e., the database) lives at the bottom of the application stack, and when it is down, the site is typically hard-down. As the years went by and AWS seemed to become more and more focused on the optics of the Status Page, I came under increasing pressure to be not first, not fast, and certainly not last. I fought that pressure, and to his credit, Andy Jassy listened, talked with his other leaders, and eventually agreed with me, though it took a number of heated conversations to get there.

I’m not the only one who is willing to forgive the mistake but not the poor communication of it.

The next day, I attended a meeting of one of the two CTO clubs I am privileged to be part of, and the consensus was that a major part of the failure was indeed poor communication from AWS. We of course traded guesses about what had actually happened, as technical folks are wont to do. However, the discussion very quickly turned to “Hey, we all know systems fail. Why are you whining? It looks like you’re not multi-regional or multi-cloud. Are you a real CTO or not?” I pointed out that this outage affected some services worldwide, such as Route53 and DNS propagation, so being multi-region within AWS alone would not have protected them. Also that day, I was in a customer advisory board meeting, and again, the talk was about how AWS needs to take more seriously its responsibility as the backing service for a significant portion of the services on the Internet, and to give people as much information as possible, as fast as possible, so that they can communicate with their own customers.

However, the measure of a company (or of a person) is not whether they make a mistake, but how they communicate it and what steps they take to remediate it. The post-mortem (linked below) is AWS through and through: they explain enough of the details to illustrate what happened, and they are vocally self-critical about how they handled it. In addition, they promise to fix those problems, and they even give a timeline. At MongoDB, our software is distributed by nature, so we rode through the outage with very little impact. At the same time, our feedback to AWS has been that IAM, Route53, and the console APIs must be turned into Internet-Critical™ services. (I just made up “Internet-Critical” 🙂) To me, this means that AWS must design at least those three services so that there is literally no known reason for them to be unavailable, for reads or for mutation operations. Worldwide. Every second of every day. Period.

AWS culture is consistent: our counterparts at AWS, all the way up to the top execs, have reached out, explained in a completely non-defensive way what happened, and told us how they intend to focus on fixing these problems so they never happen again. My friends in AWS engineering back that up, telling me that not even a week later, they are being asked to reprioritize their backlogs toward stability, availability, and observability.

AWS, the ball is in your court. We eagerly await your push into new frontiers of availability, not only for the good of your company but for the good of the millions (billions?) of people who depend on your platform every day.

Here’s the excellent post-mortem from AWS. Enjoy.